Virtual Makeup Assistant - Ethical & Accessible AI

This project is about crafting an accessible and ethical experience, connecting with humans, building trust, and creating a minimum viable product.

The Vision

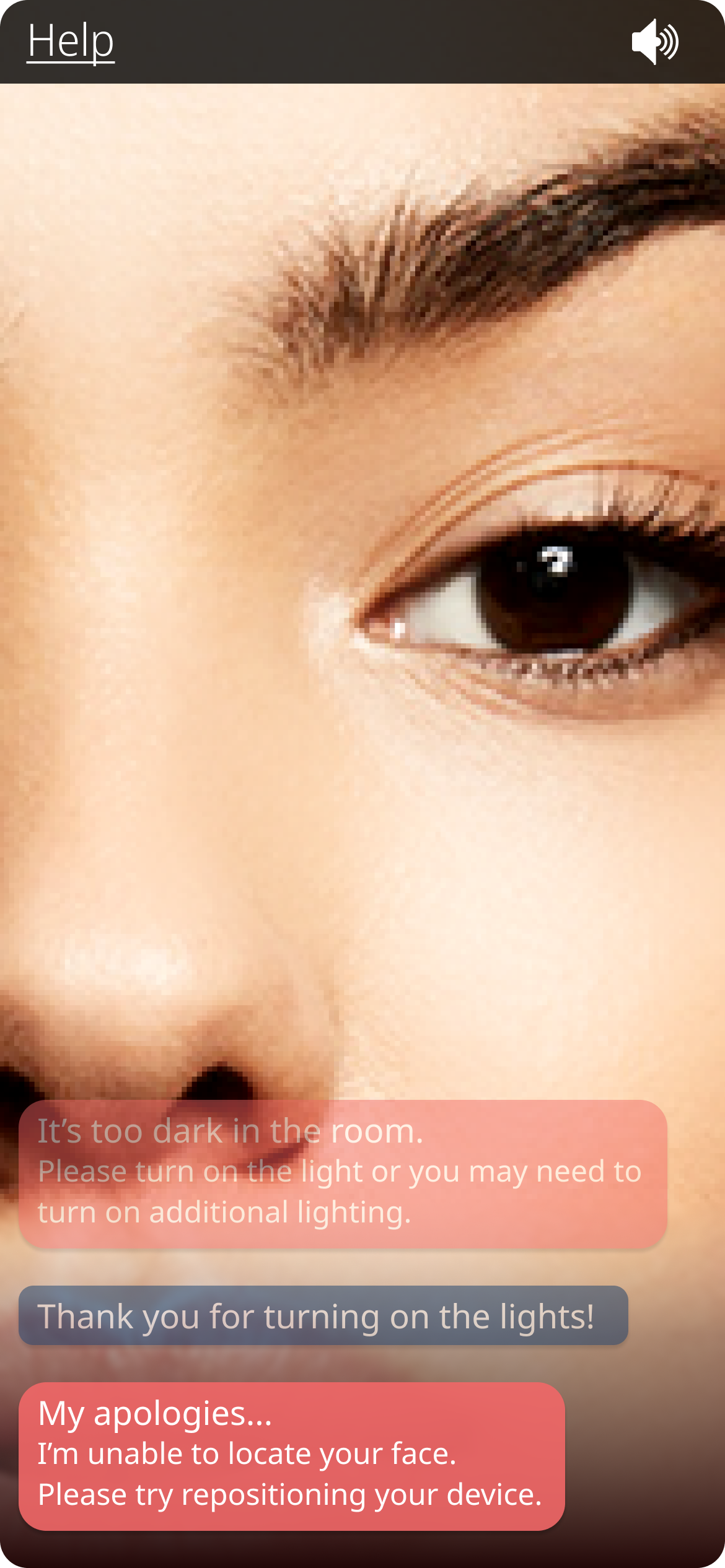

A mobile app housing an AI-powered makeup assistant that helps customers living with vision impairment. It can readily perform a “face check” and give appropriate feedback and recommendations. In a future state, it would be able to recognize products, provide makeup guidance, and offer personalized styling recommendations.

For now, the focus is to design and evaluate a proof of concept centered on the AI’s ability to identify makeup application and provide feedback on coverage and placement. To limit the scope, it covers only lipstick application at this stage. The goal is to set the foundation (pun intended).

The Challenges

This project really challenged me to dig deep into designing a customer experience. When the target audience lives with visual impairment, the experience goes beyond a usable user interface.

“How can I create a mobile experience when the users have various levels of vision impairment?”

Reaching beyond the UI, I found myself exploring voice UI, text-to-speech, content strategy for responses, prompts, and questions, error navigation, and spatial and environmental considerations.

“How can I create trust between users and a non-visual experience?”

The key was to set realistic expectations, clearly define the app’s capabilities, acknowledge its existing flaws, and provide authentic feedback.

“What additional design considerations are at play here that really help to make an impact?”

I focused on accessibility beyond color contrast and element sizes. To create an accessible experience, I explored interactions that combine haptic, audio, and visual elements.

My Design Process

Learning & Understanding

I learned from accessibility experts, people living with vision impairment, and business stakeholders to identify key requirements and the kind of experience they were seeking.

Ideating & Problem-solving

I worked closely with the development team to determine what was feasible for a proof of concept given our timeline and available resources. I initially drew inspiration from popular voice UIs such as Siri, Alexa, and Google Assistant.

Designing & Validating

I incorporated several accessibility considerations and a voice strategy into the experience. I validated the design first through an initial pass with a voice assistant, and later with 9 participants with various levels of vision impairment.

Relearning & Reiterating

Testing with participants surfaced additional factors that affected the app’s abilities, such as camera positioning, environmental lighting, and angles.

My Role & Responsibilities

As a lead UX designer, I played a key role in gathering requirements and translating them into designs relevant to both the business and its customers.

I successfully took on the additional role of senior content strategist after the person in that role left the company during the project. Thanks to my adaptability and skills, their departure had little to no impact.

Research

The research fell into three areas.

Technical Exploration - I helped define must-have functionality based on what was feasible at the time. I also explored text-to-speech services that would help the development team integrate voice into the app.

Accessibility Considerations - Guidance from subject matter experts (SMEs) provided by the client, plus my own research using voice assistants, organizations, articles, and other sources.

Validation - Live app testing via TestFlight with 9 vision-impaired participants.

“You know how people say, ‘I don’t know how I ever lived without it’? Well, I think this is going to be one of those apps.”

“If she [the app] were my sister, my sister will be very honest with me, my daughters will be very honest with me, because I kind of demand it. And that's what blind people are wanting. They're not wanting somebody to tell you look wonderful. They're just wanting somebody to validate that you've done it correctly. And it looks good.”

“I really like the fact that I don't have to push any buttons, and I can just talk to it. And it turns the camera on by itself. And, you know, there's not a lot of interactions that I need to do other than talking, and that is a really good way for us people that are blind to use applications. So that's a total positive.”

“I think well, first of all, the voice is an excellent voice. Whoever put that voice on the application... it's very calming... sounds like I walked into a boutique... that's very good... so many apps I have... it's just a monotone, 'I can't see you, I can't see you'. And when she needed something, it was a very calm, reassuring voice. And that's all I get from the app, is what I hear. So that's very very good.”

Design

To design this experience, I had to consider how my users were going to interact with this product.

Audio & Voice

The primary channel for the majority of interactions.

Azure Text to Speech

Content Strategy

Tone & Verbiage

Brand Identity vs. VoiceOver

Visual Aids

Designing for a wide range of visual impairments

UI Design

Accessible UI

Other Considerations

How can I improve the experience further?

Haptic feedback

Error Resolution

Functionality (timers, camera, etc.)

Unfortunately, I’m not able to show the actual final product due to client requirements. However, the mockup shown at the top of this page is similar in style and function.

Impact & Insights

This project is an initial proof of concept that serves as a “lighthouse” for future development and considerations. There were many obstacles to overcome, and designing an experience that goes beyond the tangible required a different perspective. It was meaningful to lead the experience and make an impact for an often underrepresented population. From the conversations and interviews, I’m glad to see users are excited about ethical technology and how AI can assist them in their day-to-day lives.

Specifically, my contributions will serve as a guide for the application’s voice strategy, its error handling, and how it anticipates customer needs.